YouTube is not only the world’s largest video platform but also a powerhouse for music discovery and consumption. Every day, YouTube's recommendation system connects millions of viewers to billions of videos, driving far more watch time on the platform than channel subscriptions or active search.

In 2025, YouTube reported over 2.5 billion monthly active users, with more than 125 million paying Premium subscribers — all of whom have full access to YouTube Music, the platform’s dedicated music app. In comparison, Spotify, the world’s largest dedicated music streaming service, counts around 675 million monthly users, of which 276 million are paying subscribers.

Even if only a portion of users engage with YouTube Music directly, the potential reach for music on YouTube is almost four times that of Spotify. That arguably makes YouTube the world’s largest free-tier streaming platform — and a key visual-first discovery engine for music, one where recommendation algorithms quietly decide which songs, artists, and trends reach billions of users.

For artists and music professionals, understanding how YouTube’s recommendation system works is key to reaching audiences in this algorithm-driven world. Yet even as these systems grow more influential by the day, the professional community still tends to view them as black boxes. Music marketers, labels, and artist managers rely on algorithms across YouTube, Spotify, and TikTok to amplify reach, drive ROI, and connect with fans — often without a clear sense of how these systems decide what content gets recommended.

At Music Tomorrow, our mission is to bring transparency to these hidden layers of the digital music industry. That’s why we’ve built tools that help artists and professionals see how their music is positioned across streaming recommendation ecosystems. Every new user joining our platform gets a free artist analysis — a first step toward understanding not just how your music is discovered, but how to make algorithmic discovery work in your favor.

How the YouTube Recommender Works

Every minute, more than 360 hours of new content are uploaded to YouTube — from vlogs and gaming streams to podcasts and concert recordings. Music is just one category in this sea of content, but even it can take many forms: official videos, live performances, lyric videos, fan uploads, and visualizers — all competing for attention on the world’s largest video platform, managed by a recommender that must account for every video and every user.

YouTube Music, by contrast, operates within a focused, audio-first environment — built for off-screen audio consumption. Its recommender powers features that streaming users expect: personalized mixes, discovery-driven playlists and feeds, artist radios, and autoplay queues.

While the context differs between the two platforms, the core recommendation challenge remains the same: finding the perfect piece of content for every user at any given moment. To do this, the system must understand both the content and the users it serves.

Most industrial recommender systems combine two complementary methods to describe content on the platform:

- Content-based filtering, which describes items by analyzing the content itself. In YouTube’s case, the system processes signals like audio, visuals, video titles, descriptions, and tags — allowing it to understand how each video compares to others on the platform.

- Collaborative filtering, which identifies relationships between videos based on user behavior. Two videos become more similar as more of the same viewers watch them.

Collaborative filtering allows recommenders to uncover deep content relations that go beyond surface-level similarity. However, because it depends on user data, relying solely on a collaborative approach can lead to the classic cold-start problem. Content-based filtering fills that gap by leveraging “objective” features like meta tags or descriptions when user interaction data is sparse.
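To make the two approaches concrete, here is a minimal sketch rather than YouTube's actual implementation. It scores video similarity from co-watch overlap and falls back to tag overlap when a video has no viewers yet. The video ids, viewer ids, tags, and the `similarity` helper are all hypothetical.

```python
from math import sqrt

# Hypothetical watch logs: video id -> set of viewer ids.
watchers = {
    "indie_live_set": {"u1", "u2", "u3"},
    "indie_official_video": {"u2", "u3", "u4"},
    "gaming_stream": {"u5"},
    "new_upload": set(),  # no views yet: the cold-start case
}

# Hypothetical tags standing in for features derived from audio, visuals, and text.
tags = {
    "indie_live_set": {"indie", "live", "guitar"},
    "indie_official_video": {"indie", "guitar", "official"},
    "gaming_stream": {"gaming", "live"},
    "new_upload": {"indie", "guitar", "visualizer"},
}


def set_cosine(a, b):
    """Cosine similarity between two sets treated as binary vectors."""
    if not a or not b:
        return 0.0
    return len(a & b) / sqrt(len(a) * len(b))


def similarity(v1, v2):
    """Collaborative score when both videos have viewers, content-based otherwise."""
    if watchers[v1] and watchers[v2]:
        return set_cosine(watchers[v1], watchers[v2])
    return set_cosine(tags[v1], tags[v2])


print(similarity("indie_live_set", "indie_official_video"))  # shared viewers
print(similarity("new_upload", "indie_live_set"))            # tag fallback
```

A production system would learn dense embeddings instead of comparing raw sets, but the division of labor is the same: behavior drives similarity wherever data exists, and content features cover the gap for new uploads.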

YouTube’s video recommender likely leans heavily on collaborative signals. Hosting everything from 12-hour live mixes to 10-second meme clips, the platform must remain content-agnostic to accommodate that diversity — relying too much on content features could introduce bias. Thanks to its massive user base, however, YouTube excels at collaborative representation: the system can detect — and amplify — even small emerging trends.

On the user modeling side, the same collaborative logic applies in reverse: two users are similar if they watch the same videos. For each recommendation, YouTube models the user as a complex, evolving “vector” built from three main types of data:

- Long-term watch history, which captures a user’s historic interests and preferences.

- Short-term session behavior, which reflects current context and intent.

- Demographic and environmental data, which enriches the user profile with context such as the current device, location, and time of day.

Once users and videos are represented in the same high-dimensional space, the recommender can determine which videos are most likely to resonate with each viewer — and start serving recommendations.
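As a rough illustration of that shared space, the sketch below blends a long-term taste vector with a session vector and scores videos by dot product. The three dimensions, the vectors, the `session_weight` parameter, and the `recommend` helper are assumptions made for the example. Demographic and environmental signals are left out for brevity.

```python
def blend(long_term, session, session_weight):
    """Mix long-term taste with current-session intent into one user vector."""
    return [
        (1 - session_weight) * lt + session_weight * s
        for lt, s in zip(long_term, session)
    ]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


# Hypothetical 3-dimensional space: [indie rock, lo-fi, gaming].
videos = {
    "indie_rock_video": [1.0, 0.0, 0.0],
    "lofi_mix": [0.0, 1.0, 0.0],
    "gaming_stream": [0.0, 0.0, 1.0],
}

long_term = [0.9, 0.1, 0.3]  # historic preference: mostly indie rock
session = [0.0, 1.0, 0.0]    # right now: listening to lo-fi


def recommend(long_term, session, session_weight):
    """Return the video whose vector best matches the blended user vector."""
    user = blend(long_term, session, session_weight)
    scores = {vid: dot(user, vec) for vid, vec in videos.items()}
    return max(scores, key=scores.get)


print(recommend(long_term, session, session_weight=0.6))  # session dominates
print(recommend(long_term, session, session_weight=0.0))  # long-term taste only
```

Shifting `session_weight` changes which video wins. This is a simplified view of how the same user can receive different recommendations depending on what they are doing at the moment.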

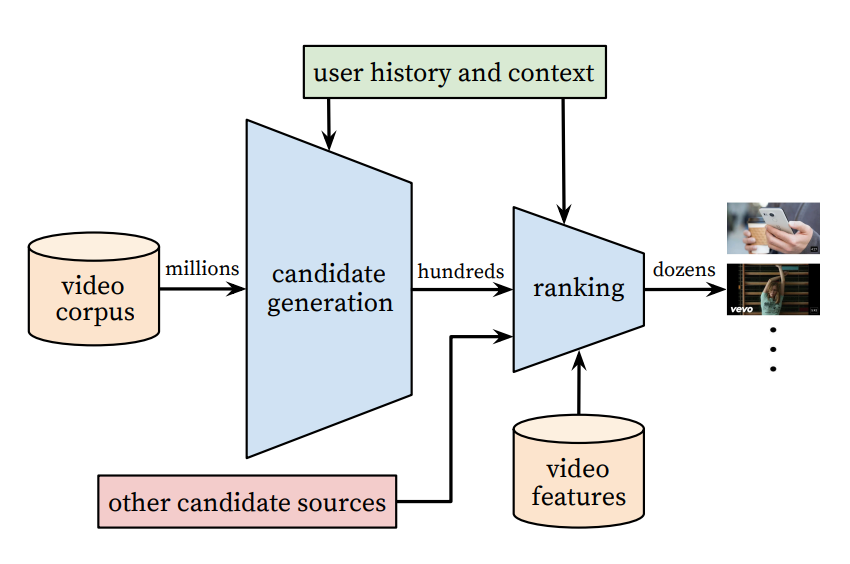

Each recommendation is a multi-stage process. The first step is candidate generation (or retrieval): the system uses lightweight models to filter billions of videos down to a few hundred that might interest the user. Once a set of candidate videos (or tracks) is retrieved, YouTube’s ranking algorithms take over. This stage involves more computationally heavy models that score each candidate to produce a personalized, ordered list of recommendations. The ranking model evaluates hundreds of features about the user, the content, and their interaction — far beyond what’s feasible during candidate generation.

YouTube Recommendation System Architecture, 2016

Source: Google Research
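The two-stage flow described above can be sketched in a few lines. This is a toy example under stated assumptions, not the production architecture: the catalog, the embeddings, and the `avg_completion` feature are invented. The "heavier" ranking model is reduced to a single extra multiplication.

```python
import heapq


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def retrieve(user_vec, catalog, k):
    """Stage 1: cheap similarity search that keeps only the top-k candidates."""
    return heapq.nlargest(
        k, catalog, key=lambda vid: dot(user_vec, catalog[vid]["embedding"])
    )


def rank(user_vec, candidates, catalog):
    """Stage 2: a richer score, computed only for the retrieved candidates."""
    def score(vid):
        item = catalog[vid]
        return dot(user_vec, item["embedding"]) * item["avg_completion"]

    return sorted(candidates, key=score, reverse=True)


# Hypothetical catalog; avg_completion stands in for the many ranking features.
catalog = {
    "a": {"embedding": [1.0, 0.0], "avg_completion": 0.3},
    "b": {"embedding": [0.8, 0.2], "avg_completion": 0.9},
    "c": {"embedding": [0.0, 1.0], "avg_completion": 0.9},
    "d": {"embedding": [0.1, 0.9], "avg_completion": 0.5},
}

user_vec = [1.0, 0.0]
candidates = retrieve(user_vec, catalog, k=2)
ranked = rank(user_vec, candidates, catalog)
print(candidates, ranked)
```

Video "a" is the closest match at retrieval time, but "b" overtakes it in ranking because viewers tend to finish it. Videos "c" and "d" are never scored by the expensive stage at all, which is the point of splitting the work.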

This multi-stage setup — retrieval followed by ranking — was outlined in Google’s foundational 2016 paper on YouTube’s recommender system and likely still forms the backbone of how recommendations work today. But how does this process apply to music?

How YouTube Algorithms Understand Music

Music on YouTube takes many forms: recorded DJ sets, livestreams, album visualizers, fan edits, and official music videos. All these formats coexist with non-music content — and the recommender must process them all through the same “neutral” lens.

This is where YouTube’s system differs greatly from audio-first recommenders like Spotify’s. While Spotify can describe a song using musical attributes such as tempo or key, YouTube relies on multimodal understanding — combining audio, visual, and text inputs.

For artists, this means metadata and presentation are critical. Detailed and relevant video descriptions and tags not only improve search performance but also help the recommender understand your track. Polished visuals keep viewers engaged and help the system infer the mood you’re aiming to create.

Still, because YouTube’s engine relies heavily on collaborative filtering, audience behavior will ultimately define your video’s trajectory and place within the platform’s ecosystem. The system learns from who watches, for how long, and what they do next. A small but engaged audience can help position your video in the right niche, while broad, low-intent traffic risks confusing the system — and leaving your content stuck in algorithmic limbo.

Things look a bit different on YouTube Music, the platform’s dedicated music domain. In 2022, Google’s research team introduced MuLan, a neural network trained to learn a shared representation of music audio and natural-language descriptions, with clear potential to improve YouTube Music recommendations. The model pairs audio with text (titles, descriptions, comments, and playlist information), which lets it tag songs by genre, mood, instrumentation, or other descriptors.

If integrated at scale, models like MuLan would allow YouTube to recognize a track featuring “lo-fi chill beats” or “heavy guitar riffs”; assign it to an emerging “POV: Indie Pop” genre; or detect that a song fits a relaxing, chill mood — even with limited metadata. Interestingly, the model also incorporates user comments, leveraging cross-domain data from YouTube’s main video platform. That means a comment like “what a gem!” under a YouTube video from ten years ago could become a signal for the recommender on YouTube Music.
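The idea of a shared audio-text space can be illustrated with a small sketch. It does not call MuLan or any real model. The embeddings below are hand-written stand-ins for what an audio encoder and a text encoder might output, and `tag_track` and its `threshold` are hypothetical.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two dense vectors."""
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


# Hypothetical text-encoder outputs for free-form descriptions.
text_embeddings = {
    "lo-fi chill beats": [0.9, 0.1, 0.0],
    "heavy guitar riffs": [0.0, 0.2, 0.9],
    "relaxing mood": [0.8, 0.3, 0.1],
}

# Hypothetical audio-encoder output for one track with no metadata at all.
track_audio_embedding = [0.85, 0.2, 0.05]


def tag_track(audio_vec, threshold=0.8):
    """Return every description whose embedding lies close to the audio."""
    return [
        label
        for label, text_vec in text_embeddings.items()
        if cosine(audio_vec, text_vec) >= threshold
    ]


print(tag_track(track_audio_embedding))
```

Because audio and text live in the same space, new descriptions can be matched against tracks without retraining a classifier for each tag. This is what would make emerging, loosely defined genres taggable.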

This system brings YouTube Music’s recommender closer to state-of-the-art music recommenders, with a few key advantages picked up along the way: YouTube’s massive free audience allows the platform to supercharge collaborative filtering, while cross-domain data (like video descriptions and comments) create an edge for content-based representations.

This brings us up to speed with how YouTube algorithms ingest and describe the vast body of music available on the platform — but what about the users?

How YouTube Algorithms Understand Users (and Their Tastes in Music)

To model user recommendation queries, YouTube leverages both long-term watch history and session-based context. From a music perspective, long-term preference modeling allows the platform to understand a user's tastes — their preferred genres, artists, moods, and scenes. Over months or even years of usage, the system gathers a rich history of user behavior: channels followed, searches made, and interactions such as likes, skips, or comments. All these signals feed into a persistent user embedding that captures general taste.

Short-term signals and session context are equally important. YouTube’s algorithm pays attention to what the user is doing right now: are they watching headphone reviews, shopping guides, or finishing a music video? These recent actions provide context, allowing the system to adapt seamlessly to countless usage patterns. This is what makes YouTube — originally a video recommender — such a critical platform for music. Any of the platform’s billions of users is just a few clicks away from a personalized music flow.

On YouTube Music, the system applies Transformer-based models to make better sense of user sequences. These models process recent actions — plays, skips, likes — and determine which past behaviors are most relevant to the current session.

Google’s research team illustrates the model in action with the following scenario: a user usually skips fast-tempo songs but enjoys them during workouts. Using the transformer-based approach, the recommender learns this context — recommending uptempo tracks during workout sessions without permanently changing the user’s overall taste profile.

Technically, the Transformer model assigns attention weights to past actions based on the current sequence. In practice, this means it recognizes contextual signals (like being at the gym) and downplays long-term preferences that are irrelevant to that context. This allows the recommender to be context-aware. YouTube Music’s system considers not just what users did but why, interpreting intent alongside behavior.
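The workout scenario can be reproduced with a single attention step. This is a deliberately small sketch: a real Transformer learns its query, key, and value projections from data and stacks many such layers. Here the context vectors and the uptempo signal are set by hand, and every name is an assumption.

```python
from math import exp, sqrt


def softmax(xs):
    m = max(xs)
    exps = [exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Single-query scaled dot-product attention over past actions."""
    scale = sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    context = sum(w * v for w, v in zip(weights, values))
    return weights, context


# Hypothetical history. Keys encode context as [workout, at home];
# values encode the uptempo signal: -1.0 = skipped, +1.0 = played in full.
keys = [[0.0, 2.0], [0.0, 2.0], [2.0, 0.0]]
values = [-1.0, -1.0, 1.0]

gym_weights, uptempo_at_gym = attend([2.0, 0.0], keys, values)
home_weights, uptempo_at_home = attend([0.0, 2.0], keys, values)

print(uptempo_at_gym, uptempo_at_home)
```

With a gym query, most of the attention weight lands on the one past workout play, so the uptempo signal comes out positive. With a home query, the two skips dominate and the signal is negative. The underlying history is identical in both cases.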

This intent component plays an increasingly important role in music recommendations across all DSPs, opening up new avenues for marketing in a world where digital consumption is increasingly functional. Listeners expect music that matches their current activity, and recommenders are there to provide the soundtrack.

Now that the recommender understands its users and their context, how does it decide what actually gets recommended?

How YouTube Algorithms Decide What Music Gets Recommended

Once a set of candidate videos or tracks is retrieved, ranking models evaluate hundreds of features about the user, the content, and their interaction — far beyond what’s feasible in the candidate generation stage. The model’s task is to predict how likely the user is to engage positively with each candidate video or song if it’s shown.

Originally, YouTube optimized its ranking for watch time — under the logic that if a user spends more time watching, the content must be satisfying. This was a major shift from optimizing for click-through rate, since clicks alone can’t measure satisfaction. By training models to predict expected watch time, YouTube’s ranking system learned to favor videos that users actually consume, surfacing engaging content over clickbait.

Over time, the ranking algorithm evolved to juggle multiple objectives beyond watch time. Google’s researchers describe a multitask ranking system that simultaneously optimizes for engagement and satisfaction. Engagement focuses on implicit metrics, including views, watch duration, and retention, while satisfaction goals involve explicit actions like likes or positive ratings. The result is a weighted blend that optimizes for long-term satisfaction — producing recommendations tuned for genuine user experience rather than raw clicks.
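A weighted blend of several predicted objectives might look like the sketch below. The objective names, the predicted values, and the weights are all invented for illustration. The real system's objectives and weighting are not public in this form.

```python
def blended_score(predictions, weights):
    """Weighted sum of per-objective predictions for one candidate."""
    return sum(weights[name] * predictions[name] for name in weights)


def rank_candidates(candidates, weights):
    """Order candidate ids by blended score, best first."""
    return sorted(
        candidates,
        key=lambda vid: blended_score(candidates[vid], weights),
        reverse=True,
    )


# Hypothetical model outputs, each a probability or fraction in [0, 1].
candidates = {
    "clickbait_video": {"click": 0.9, "watch_fraction": 0.1, "like": 0.01},
    "solid_music_video": {"click": 0.4, "watch_fraction": 0.8, "like": 0.2},
}

# Hypothetical weights favoring consumption and explicit satisfaction.
weights = {"click": 0.1, "watch_fraction": 0.6, "like": 0.3}

print(rank_candidates(candidates, weights))
print(rank_candidates(candidates, {"click": 1.0}))  # click-only objective
```

Under the click-only objective the clickbait video wins. Once watch time and likes carry weight, the video that people actually finish and enjoy moves to the top.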

Finally, a post-ranking filtering step applies business rules or diversity constraints to finalize the recommendation output. For example, the system may filter out already-watched videos or limit consecutive videos from the same channel or artist. On YouTube Music, post-filtering ensures playlists don’t feature multiple songs by the same artist in sequence, promoting variety.
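One simple way to express such rules is a greedy pass over the ranked list. The following is an assumed approach rather than YouTube's documented logic. The `post_filter` helper, the `max_run` parameter, and the track data are hypothetical.

```python
def extends_run(result, artist, max_run):
    """True if adding this artist would exceed max_run consecutive items."""
    tail = result[-max_run:]
    return len(tail) == max_run and all(r["artist"] == artist for r in tail)


def post_filter(ranked, watched, max_run=1):
    """Drop already-watched items, then reorder to break up same-artist runs."""
    pending = [item for item in ranked if item["id"] not in watched]
    result = []
    while pending:
        # Take the best remaining item that respects the rule; if none does,
        # fall back to the best remaining item rather than dropping content.
        pick = next(
            (it for it in pending if not extends_run(result, it["artist"], max_run)),
            pending[0],
        )
        pending.remove(pick)
        result.append(pick)
    return result


ranked = [
    {"id": "t1", "artist": "A"},
    {"id": "t2", "artist": "A"},
    {"id": "t3", "artist": "B"},
    {"id": "t4", "artist": "C"},
    {"id": "t5", "artist": "D"},
]

final = post_filter(ranked, watched={"t4"})
print([item["id"] for item in final])
```

The watched track is removed, and the second track by artist A is pushed back one slot so that no artist appears twice in a row. Ranking order is otherwise preserved.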

Now, with all that in mind, how can you ensure your music thrives on the platform? The ranking logic outlined above means there’s no magic shortcut to “gaming” the system — no perfect keyword or hashtag that guarantees your music gets recommended, no one-size-fits-all ad campaign setup that would “hack the algorithm”. The songs that get recommended are those that fit users’ tastes and intent, and that have historically satisfied similar listeners.

That said, there are still meaningful steps artists can take to maximize their chances of connecting with the right audiences — the ones most likely to respond positively and reinforce those signals.

What Artists Can Do To Get Recommended on YouTube

To sum up: candidate retrieval combines detailed user and content representations to generate potential items, while ranking determines which actually surface. From a music marketing perspective, the goal is to maximize your chances of entering the right candidate pools — queued after relevant artists or songs — and to perform strongly enough in ranking to reach the right audiences.

A thoughtful, data-informed algorithmic strategy can significantly improve an artist’s discoverability through playlists and recommendation surfaces, amplifying engagement and helping turn casual listeners into fans.

At Music Tomorrow, we’ve spent years helping artists and teams understand how recommendation systems shape discovery — and how to use that knowledge strategically. Our platform maps your algorithmic landscape, identifies playlists and audiences aligned with your sound, and provides insights to strengthen your release strategy.

If you’d like to learn more about our approach, check out our RSO series or case studies — but for those looking for a TL;DR, here’s a quick guide:

A Quick Artist Guide for Getting Recommended on YouTube

1) Before Release: Set the Table

- Define your algorithmic targets: key genres, moods, artists, playlists, and listening contexts. Use Music Tomorrow to understand your existing audiences and potential growth opportunities.

- Nail metadata hygiene: provide complete, accurate data — from titles, tags, and descriptions to distributor pitch forms and lyrics (sourced via MusixMatch/LyricFind).

- Set up your YouTube profiles: create an Official Artist Channel and use YouTube Creator Studio to ensure your profiles across YouTube and YouTube Music reflect your artistic direction and provide a portal into your artist universe.

- Produce high-quality visuals: whether music videos are part of your strategy or not, ensure a consistent aesthetic. A good visualizer or lyric video often performs better on YouTube than a static album cover.

2) Release Week: Win the First Sessions

- Target high-quality audiences that align with your algorithmic goals. Precise, efficient ad targeting is essential for generating collaborative signals and triggering algorithmic recommendations. With Google Ads, you can run precisely targeted campaigns directly on the platform. Check out our step-by-step guide to learn how to use Music Tomorrow artist clusters to set up effective YouTube ad campaigns.

- Focus on engagement over reach: watch completions, likes, and shares matter more than vanity view-counts.

- If you’re releasing an official video, schedule a YouTube Premiere — and ensure your core fans show up. A successful premiere builds momentum and generates strong early engagement signals — critical to set your video up for algorithmic success.

3) Sustain & Grow: Reinforce the Signal

- Follow up with rich content: remixes, concert footage, behind-the-scenes clips, alternate visualizers, artist vlogs and podcasts. After all, YouTube is a very diverse video platform, so options are almost limitless — keep fans returning for more.

- Leverage Shorts: short-form video discovery is now central to music marketing; don’t overlook YouTube Shorts.

- Maintain a steady release cadence and stay consistent with audience targeting. Relevant, loyal and engaged viewers are your most valuable resource for educating the algorithm.

4) Avoid at All Costs

- Fake views or vanity campaigns that generate poor engagement.

- Broad, unfocused targeting that confuses the algorithm and distorts collaborative signals.

Use Music Tomorrow to make it actionable.

Map your algorithmic landscape, and get access to artist reports with deep audience insights for digital ad targeting, target playlists for pitching, plus a tailored metadata & Spotify feature checklist. New users get one free credit to analyze any artist with a Spotify page.